EMOTHAW: A Novel Database for Emotional State Recognition from Handwriting and Drawing


Overview

The EMOTional State Recognition from HAndwriting and DraWing (EMOTHAW) database contains digitized handwriting and drawing data, including handwritten Italian text, acquired with an INTUOS WACOM series 4 digitizing tablet and a special writing device named Intuos Inkpen.

It can be used to train and test handwritten text recognizers and to perform writer identification and verification experiments.

The database was first published in [Likforman16] in IEEE Transactions on Human-Machine Systems (see below).

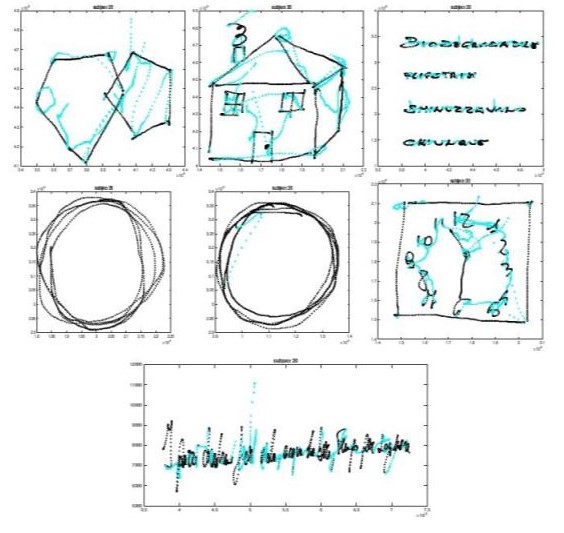

It contains a collection of more than 129 handwriting and drawing samples and includes 7 tasks:

- Copy of a two-pentagon drawing

- Copy of a house drawing

- Writing of four Italian words in capital letters (BIODEGRADABILE (biodegradable), FLIPSTRIM (flipstrim), SMINUZZAVANO (to crumble), CHIUNQUE (anyone))

- Loops with left hand

- Loops with right hand

- Clock drawing test

- Writing of the following phonetically complete Italian sentence in cursive letters: "I pazzi chiedono fiori viola, acqua da bere, tempo per sognare" (Crazy people are seeking purple flowers, drinking water and dreaming time).

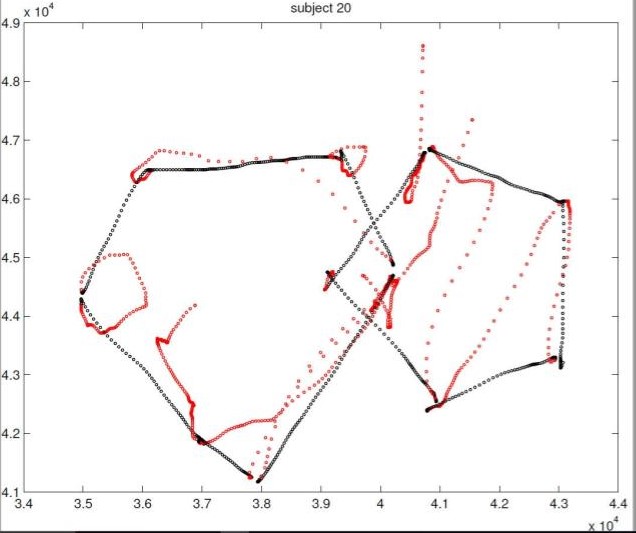

An example is depicted in the following figure.

Data were recorded with an INTUOS WACOM series 4 digitizing tablet and a special writing device named Intuos Inkpen. This device provides high spatial and pressure accuracy and can be considered a state-of-the-art tablet.

Participants were required to write on a sheet of paper (DIN A4 normal paper) laid on the tablet.

The resulting files are SVC files, .svc being the file extension provided by Wacom. SVC files are ASCII files that can be opened with Word, Notepad and other standard text editors.

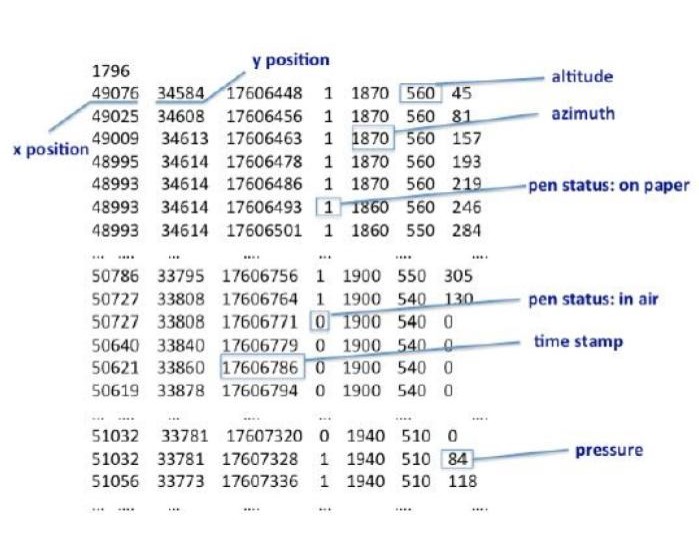

The following information is captured:

- Position on the x-axis

- Position on the y-axis

- Time stamp

- Pen status

- Azimuth angle of the pen with respect to the tablet

- Altitude angle of the pen with respect to the tablet

- Pressure applied by the pen

An example of the available data follows:

3444

50962 34188 16718871 1 1950 620 15

50961 34188 16718893 1 1950 620 100

50959 34191 16718908 1 1950 620 154

50957 34194 16718923 1 1950 620 218

50958 34192 16718916 1 1950 620 190

...
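The excerpt above can be read with a few lines of Python. The following is a minimal sketch, not an official reader: it assumes that the first line of an SVC file gives the number of recorded points and that the seven columns follow the order of the list above (x, y, time stamp, pen status, azimuth, altitude, pressure). The names `PenSample` and `parse_svc` are hypothetical and chosen for illustration only; units and value ranges are not stated on this page, so the fields are kept as raw integers.

```python
from typing import List, NamedTuple, Tuple


class PenSample(NamedTuple):
    """One recorded point; field order is ASSUMED to match the list above."""
    x: int
    y: int
    timestamp: int
    pen_status: int
    azimuth: int
    altitude: int
    pressure: int


def parse_svc(text: str) -> Tuple[int, List[PenSample]]:
    """Parse the text of an SVC file.

    Returns (declared_count, samples). The first non-empty line is ASSUMED
    to be the number of points in the file; every following non-empty line
    must hold exactly seven whitespace-separated integers.
    """
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return 0, []

    declared_count = int(lines[0])
    samples: List[PenSample] = []
    for line_no, line in enumerate(lines[1:], start=2):
        fields = line.split()
        if len(fields) != 7:
            raise ValueError(
                f"non-empty line {line_no}: expected 7 fields, got {len(fields)}"
            )
        samples.append(PenSample(*(int(f) for f in fields)))
    return declared_count, samples


# The five rows shown in the excerpt above (the file itself is longer).
EXCERPT = """3444
50962 34188 16718871 1 1950 620 15
50961 34188 16718893 1 1950 620 100
50959 34191 16718908 1 1950 620 154
50957 34194 16718923 1 1950 620 218
50958 34192 16718916 1 1950 620 190
"""

declared, samples = parse_svc(EXCERPT)
print(declared, len(samples), samples[0].pressure)
```

For a real file, `parse_svc(open(path, encoding="ascii").read())` should be enough under the same assumptions. The declared count is returned rather than enforced so that truncated excerpts such as the one above still parse.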


Download

A sample of the dataset is available at the following link.

The whole dataset is available on request. Fill in the form available at the following link, and we will send you a link to download the dataset.

Contacts

If you have any questions or suggestions, please contact the authors (the email addresses are protected from spambots on the original page; JavaScript must be enabled to view them).

Terms of Use

The EMOTHAW database may be used for non-commercial research purposes only. If you publish scientific work based on the EMOTHAW database, we ask that you include a reference to our database.

Publications

- Laurence Likforman-Sulem, Anna Esposito, Marcos Faundez-Zanuy, Stéphan Clémençon and Gennaro Cordasco, "EMOTHAW: A Novel Database for Emotional State Recognition from Handwriting and Drawing". To appear in IEEE Transactions on Human-Machine Systems.

Citing Emothaw

We encourage you to cite our work if you have used our dataset (bibtex)

Use the following BibTeX citation for the Emothaw paper:

@Article{Likforman16,

author = {Laurence Likforman-Sulem and Anna Esposito and Marcos Faundez-Zanuy and Stéphan Clémençon and Gennaro Cordasco},

title = {EMOTHAW: A Novel Database for Emotional State Recognition from Handwriting and Drawing},

journal = {IEEE Transactions on Human-Machine Systems},

year = {2016},

}

Use the following BibTeX citation for the Emothaw dataset:

@Misc{emothawData16,

author = {Laurence Likforman-Sulem and Anna Esposito and Marcos Faundez-Zanuy and Stéphan Clémençon and Gennaro Cordasco},

title = {EMOTHAW: A Novel Database for Emotional State Recognition from Handwriting and Drawing},

howpublished = {https://sites.google.com/site/becogsys/emothaw},

year = {2016},

}